Latest News

Olivier Pietquin from DeepMind and University Lille 1 (France) will give an invited talk at AWRL 2016!

Title: Closing the interaction loop with (inverse) reinforcement learning

Abstract: Modern interactive systems are mostly based on an aggregation of Machine Learning (ML) modules that are trained on batch data (e.g. automatic speech, gesture or emotion recognition, language understanding or generation, text-to-speech synthesis, etc.). Yet, closing the interaction loop with ML-based techniques is still an issue because keeping the human in the learning loop raises new challenges for ML (such as non-stationarity, subjective evaluation, risk aversion, safe exploration, etc.). In this talk, I will discuss a Reinforcement Learning (RL) approach to this problem and show how some of these challenges can be addressed with direct and especially inverse RL methods in the context of Markov Decision Processes.

Lihong Li from Microsoft Research will give an invited talk at AWRL 2016!

Program

- 07:40 Registration Open - S Block Foyer

- 08:50-09:00 Introduction

- 09:00-10:00 Keynote 1 by Olivier Pietquin (DeepMind and University Lille 1, France)

- 10:00-10:20 Break

- 10:20-12:00 Session 1 (4 talks, 20m + 5m questions each)

  - Reinforcement-based Simultaneous Algorithm and its Hyperparameters Selection by Valeria Efimova, Andrey Filchenkov, Anatoly Shalyto

  - Quantile Reinforcement Learning by Hugo Gilbert and Paul Weng

  - Reinforcement Learning with Skew-symmetric Bilinear Utility Functionals by Hugo Gilbert and Paul Weng

  - Reinforcement Learning Approach for Parallelization in Filters Aggregation Based Feature Selection Algorithms by Ivan Smetannikov, Ilya Isaev and Andrey Filchenkov

- 13:00-14:00 ACML Industry Keynote (Room: S.1.04)

- 14:15-15:15 Keynote 2 by Lihong Li (Microsoft)

- 15:15-15:40 Session 2 (1 talk, 20m + 5m questions)

  - Learning Functional Meta-Policy over Parameterized Task Distribution by Qing Da, Yang Yu and Zhi-Hua Zhou

- 15:40-16:00 Break

- 16:00-16:50 Session 3 (2 talks, 20m + 5m questions each)

  - Extracting Actionability from Machine Learning Models by Sub-optimal Deterministic Planning by Qiang Lyu, Yixin Chen, Zhaorong Li, Zhicheng Cui, Ling Chen, Xing Zhang and Haihua Shen

  - Classification-based Optimization for Direct Policy Search by Yang Yu

- 16:50-17:40 Free discussions

Motivation

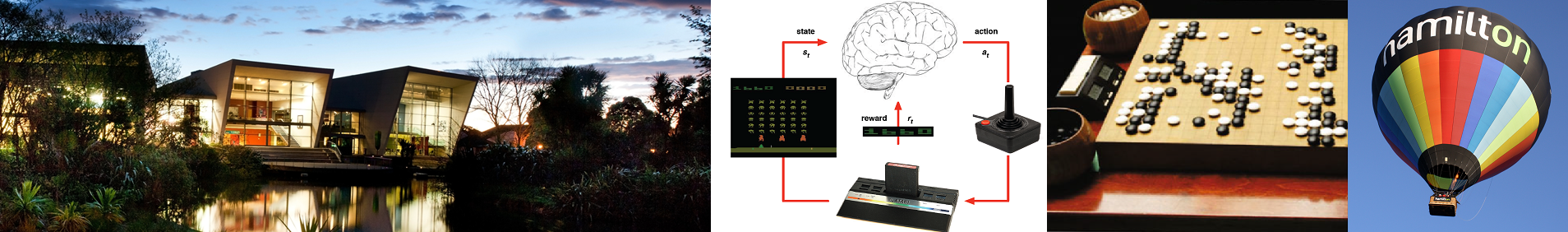

The first Asian Workshop on Reinforcement Learning (AWRL 2016) focuses on both theoretical models and algorithms of reinforcement learning (RL) and its practical applications. In the last few years, we have seen growing interest in RL from researchers in different research areas and industries. We invite reinforcement learning researchers and practitioners to participate in this world-class gathering. We intend to make this an exciting event for researchers and practitioners in RL worldwide, not only for the presentation of top-quality papers, but also as a forum for the discussion of open problems, future research directions and application domains of RL. AWRL 2016 will consist of keynote talks (TBA), contributed paper presentations, and discussion sessions spread over a one-day period.

Reinforcement learning (RL) is an active field of research that deals with the problem of sequential decision-making by single or multiple agents in unknown, possibly partially observable domains, whose (potentially non-stationary) dynamics may be deterministic, stochastic or adversarial. RL's objective is to develop agents' capability of learning optimal policies in unknown environments (possibly in the face of other coexisting agents) by trial and error and with limited supervision. Recent developments in exploration-exploitation, online learning, planning, and representation learning are making RL increasingly appealing for real-world applications, with promising results in challenging domains such as recommendation systems, computer games, and robotics systems. We would like to create a forum to discuss interesting theoretical and empirical results related to RL. The ultimate goal of this workshop is to bring together diverse viewpoints in the RL area in an attempt to consolidate the common ground, identify new research directions, and promote the rapid advance of the RL research community.

Topics

- Exploration/Exploitation

- Function approximation in RL

- Deep RL

- Policy search methods

- Batch RL

- Kernel methods for RL

- Evolutionary RL

- Partially observable RL

- Bayesian RL

- Multi-agent RL

- RL in non-stationary domains

- Life-long RL

- Non-standard Criteria in RL, e.g.:

  - Risk-sensitive RL

  - Multi-objective RL

  - Preference-based RL

- Transfer Learning in RL

- Knowledge Representation in RL

- Hierarchical RL

- Interactive RL

- RL in psychology and neuroscience

- Applications of RL, e.g.:

  - Recommender systems

  - Robotics

  - Video games

  - Finance

Paper Submission

Workshop submissions and camera-ready versions will be handled by EasyChair. Click here for submission.

Papers should be formatted according to the ACML formatting instructions for the Conference Track. Submissions need not be anonymous.

AWRL is a non-archival venue and there will be no published proceedings. However, the papers will be posted on this website. Therefore, it will be possible to submit to other conferences and journals both in parallel to and after AWRL 2016. We also welcome submissions to AWRL that are under review at other conferences and workshops. For this reason, please feel free to submit either anonymized or non-anonymized versions of your work. We have enabled anonymous reviewing, so EasyChair will not reveal the authors unless you choose to do so in your PDF.

At least one author from each accepted paper must register for the workshop. Please see the ACML 2016 Website for information about accommodation and registration.

Important Dates

- Submission deadline: Aug. 31, 2016

- Notification of acceptance: Oct. 10, 2016

- Camera-ready deadline: Oct. 24, 2016

- Workshop date: Nov. 16, 2016

- ACML dates: Nov. 16-18, 2016

Organizing Committee

Jianye Hao, Tianjin University, China

Paul Weng, SYSU-CMU Joint Institute of Engineering, China

Yang Yu, Nanjing University, China

Zongzhang Zhang, Soochow University, China